By Frédéric-Ismaël Banville

During the recent PhilMiLCog conference (Western’s graduate conference in philosophy of mind, language and cognitive science), the university welcomed eight graduate student speakers and three keynote speakers: Prof. Edouard Machery (Pittsburgh), Prof. Jacqueline Sullivan (Western, Philosophy) and Prof. Jody Culham (Western, Brain and Mind Institute). As a member of the organizing committee, I would be remiss if I did not thank each of our speakers for their fantastic talks. While it would be interesting to review all of the talks, this post will focus on those given by Jody Culham and Jacqueline Sullivan: not only did they highlight important issues related to scientific practice, but they also complemented each other in their focus.

Prof. Jody Culham’s lab at Western’s Brain and Mind Institute (BMI) investigates the neural basis of reaching and grasping, the impact of focal lesions to specific brain areas on these processes, and the interaction of these motor processes with other processes such as object identification and, more generally, visual processing. The lab combines functional imaging techniques (e.g., fMRI) with behavioural tasks. While fMRI is a powerful tool, it comes with certain restrictions, one of them being the very constrained space within the scanner, which limits the tasks that subjects can carry out. As a consequence, it has become conventional to study object recognition using two-dimensional (2-D) images of objects displayed on a screen inside the scanner. Although this is standard practice in fMRI studies, there is reason to call it into question, and this is precisely what Culham and BMI postdoctoral fellow Jacqueline Snow (who led the work presented in the talk) are doing.

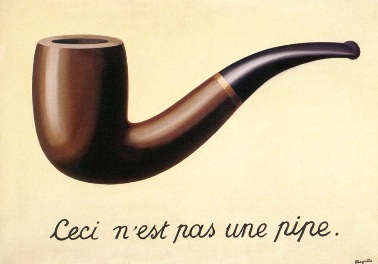

As Culham pointed out, the Belgian surrealist painter René Magritte was ahead of researchers on this, as his famous 1928 painting The Treachery of Images (La trahison des images) highlighted the difference between the representation of an object and the object itself.

The Treachery of Images, by René Magritte (1928-29)

Magritte’s insight that the representation of an object is not the object itself seems intuitive, even obvious. It is thus puzzling that virtually all research on object recognition uses 2-D pictures or photographs of objects. A natural question, then, is whether the same neural processes are involved when an individual encounters real three-dimensional (3-D) objects as when they view 2-D images. The standard assumption is that 2-D images and real 3-D objects elicit the same patterns of activation in the brain. To restate Magritte’s insight in terms of the question that preoccupies Culham and the members of her lab, one may wonder whether 2-D pictures are ecologically valid stimuli for studying object recognition: are 2-D images a good way to study how we recognize objects that are 3-D and that we can manipulate? Culham’s intuition is that they are not, which would translate into a lower correlation (in terms of neural activation) between 2-D images and real objects than neuroscientists usually suppose. This alternative hypothesis is supported by pilot results from five subjects, which show differential activation of the lateral occipital cortex, an area involved in object recognition, when subjects are presented with real objects rather than pictures.

As mentioned above, however, fMRI studies come with important constraints. In the present case, the most obvious one is the limited space within the scanner, which makes it difficult to compare real objects with 2-D images. Derek Quinlan and Jim Ladich, technicians at the BMI, provided a solution to this problem by building the DROID (delivery of real objects for imaging device) system, a plastic conveyor belt that delivers both images and real objects to subjects during fMRI imaging. This device enabled Culham and Snow to show that photos and real objects actually evoke different activation patterns (for more details, see Snow et al., 2011).

This research provides a reason to call into question a widely accepted methodological assumption. Notice, however, how heavily this development depended on the ingenuity of individual researchers, who managed to come up with a way to “bring real objects into the scanner”, so to speak. This relates to the concerns highlighted in Prof. Jacqueline Sullivan’s talk.

Sullivan started by describing current work in the philosophy of neuroscience that construes explanation in the field as the search for the mechanisms responsible for particular phenomena (Craver, 2009). One important feature of Craver’s account is the idea of a mosaic unity of science. On this view, neuroscience can be unified through the concerted efforts of various researchers focusing on specific aspects of the brain, with the models and explanations of some providing constraints on the work of others. To adopt Craver’s terminology, researchers attempt to move from how-possibly models and explanations (provisional explanations that suggest possible ways in which a phenomenon could occur) to how-actually models and explanations (which describe how the mechanisms actually work). In other words, Craver’s view (see also Piccinini & Craver, 2011) is that the neurosciences are progressing towards a form of unity that can be understood, broadly, in two ways. First, the different sub-disciplines (e.g., neurobiology and cognitive neuroscience) provide partial explanations that are unified by the fact that they account for various aspects of the brain in a complementary way. Second, explanations at one level provide constraints on explanations at other levels.

One of the salient points of Sullivan’s talk was that if neuroscience were indeed progressing towards the kind of mosaic unity that Craver puts forward, such progress would be accompanied by some form of standardization of experimental practice. However, as Sullivan remarks (see also Sullivan, 2010), such methodological standardization does not seem to be occurring. This links up quite clearly with Culham’s talk. Indeed, the work being done by Culham, Snow and their colleagues shows that current standard practices in neuroscience can be problematic, and that failing to question them could entrench counterproductive assumptions, such as the assumption that 2-D images and real 3-D objects elicit similar responses.

Thus, to answer our titular question: experimental practices should matter quite a bit to philosophers of science. The research done in the Culham lab provides a clear example of how differences in experimental practices can, at times, be crucial to understanding how a discipline progresses. While the unity of science, in the form of complementary sub-disciplines providing detailed and compatible explanations, is certainly worth pursuing, neuroscience is still at a stage where methodological diversity is crucial. Premature standardization is not something we should wish for, and paying close attention to experimental practices not only highlights this point but also provides us with the tools to better understand how such unification may come about.

*Special thanks to Prof. Jacqueline Sullivan for very helpful comments on this post.

Works Cited

- Craver, C. F. (2009). Explaining the Brain: Mechanisms and the Mosaic Unity of Neuroscience. Oxford University Press.

- Piccinini, G., & Craver, C. (2011). Integrating Psychology and Neuroscience: Functional Analyses as Mechanism Sketches. Synthese, 183(3), 283–311.

- Snow, J. C., Pettypiece, C. E., McAdam, T. D., McLean, A. D., Stroman, P. W., Goodale, M. A., & Culham, J. C. (2011). Bringing the real world into the fMRI scanner: Repetition effects for pictures versus real objects. Scientific Reports, 1, 130. doi:10.1038/srep00130

- Sullivan, J. A. (2010). Reconsidering “Spatial Memory” and the Morris Water Maze. Synthese, 177(2), 261–283.