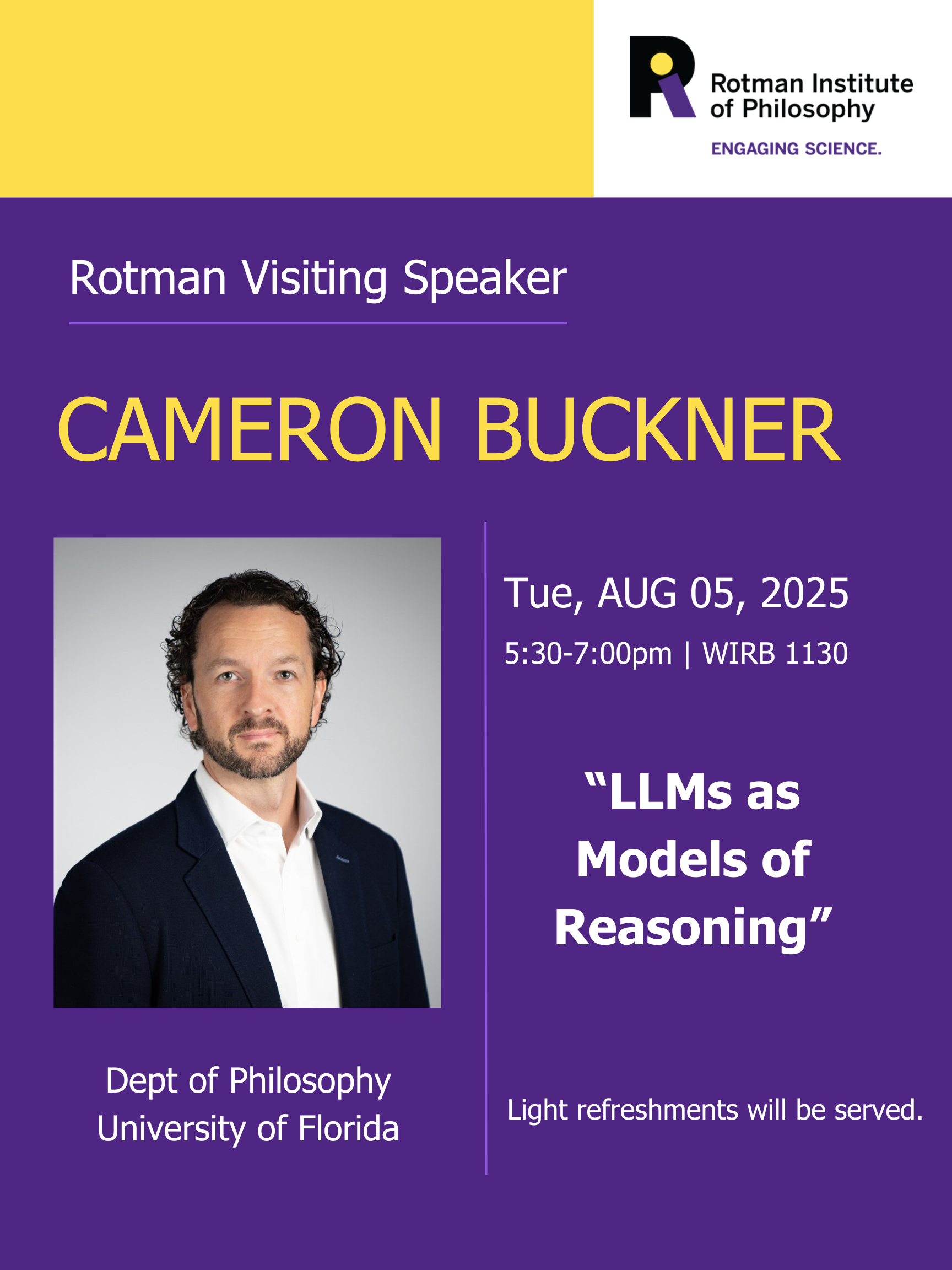

Rotman Visiting Speaker: Cameron Buckner

5 August 2025, 5:30–7:00 pm EDT

“LLMs as Models of Reasoning”

Abstract: Recent advances in large language models that use self-prompting, such as OpenAI’s o1/o3 and DeepSeek, have begun to encroach on human-level performance on “higher reasoning” problems in mathematics, planning, and problem-solving tasks. OpenAI in particular has made ambitious claims that these models are reasoning and that, by scrutinizing their chains of self-prompting, we can “read the minds” of these models, with obvious implications for solving problems of opacity and safety. In this talk, I review four different methodological approaches for evaluating the success of these models as models of human reasoning (“psychometrics”, “signature limits”, “inner speech”, and “textual culture”), focusing especially on comparisons to philosophical and psychological work on “inner speech” in human reasoning. I argue that this work suggests two conclusions. First, while the achievements of self-prompting models are impressive and may make their behavior more human-like, we should be skeptical that problems of transparency and safety are solved by scrutinizing chains of self-prompting. Second, more philosophical and empirical work is needed to understand how and why self-prompting improves the performance of these models on reasoning problems.

Bio: Cameron Buckner is a Professor and the Donald F. Cronin Chair in the Humanities at the University of Florida. His research primarily concerns philosophical issues that arise in the study of non-human minds, especially animal cognition and artificial intelligence. He began his academic career in logic-based artificial intelligence. This research inspired an interest in the relationship between classical models of reasoning and the (usually very different) ways that humans and animals actually solve problems, which led him to the discipline of philosophy. He received a PhD in Philosophy from Indiana University in 2011 and held an Alexander von Humboldt Postdoctoral Fellowship at Ruhr-University Bochum from 2011 to 2013. Recent representative publications include “Empiricism without Magic: Transformational Abstraction in Deep Convolutional Neural Networks” (2018, Synthese) and “Rational Inference: The Lowest Bounds” (2017, Philosophy and Phenomenological Research); the latter won the American Philosophical Association’s Article Prize for the period 2016–2018. He recently published a book with Oxford University Press, From Deep Learning to Rational Machines, that uses empiricist philosophy of mind (from figures such as Aristotle, Ibn Sina, John Locke, David Hume, William James, and Sophie de Grouchy) to understand recent advances in deep-neural-network-based artificial intelligence.

Attendance is free. For more information, please contact Andrew Richmond at arichmo8@uwo.ca.

Light refreshments will be served post-talk.